Research Interests

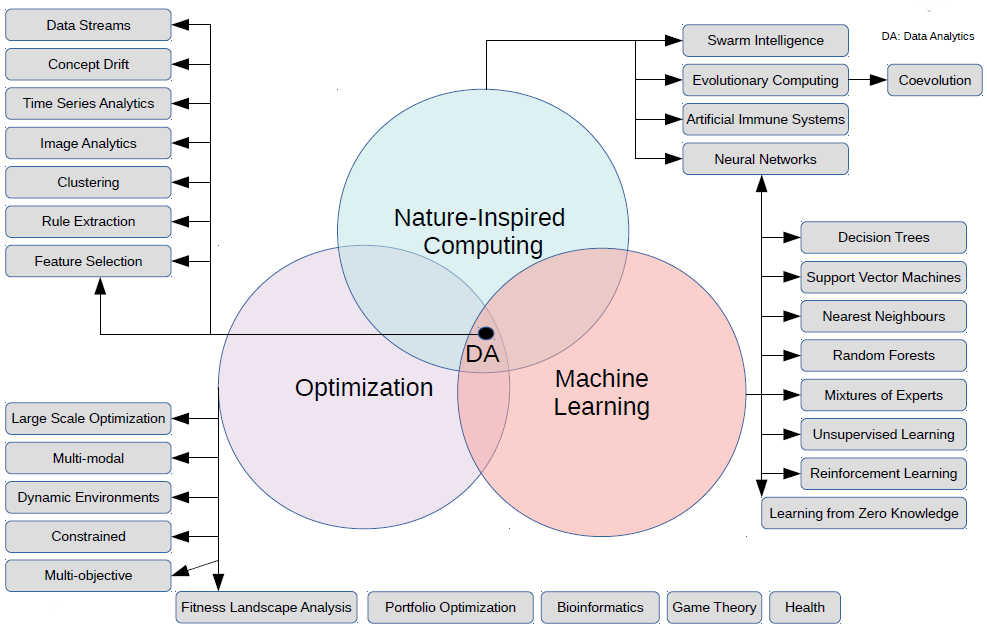

My research interests, and those of my research group, are diverse within the broad field of artificial intelligence. However, our focus is mostly on theoretical and applied research in computational intelligence, covering the paradigms of swarm intelligence, evolutionary computation, artificial neural networks, and hyper-heuristics. In addition, we conduct research in fitness landscape analysis, machine learning, and data analytics. Our research focuses mostly on the development of new algorithms, the improvement of existing algorithms, and the theoretical and empirical analysis of algorithm performance. While not a strong focus of our research, we also do research in deep learning and applied research, where the algorithms developed are used to solve real-world problems.

As part of our research into the development of new and improved algorithms, we have developed, and continue to develop, a library of computational intelligence tools.

The remainder of this page provides a summary of our current research with reference to our main focus areas. To see what we have produced in our past research, refer to the publications and past postgraduate student pages.

Swarm Intelligence

The bulk of our research is into the development of swarm-based algorithms to solve optimization problems. Here the focus is mostly on particle swarm optimization (PSO), with some research also done on ant algorithms and bacterial foraging, amongst other swarm-based approaches. We have also done some work in swarm robotics.
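For readers unfamiliar with PSO, the following is a minimal sketch of a global-best PSO with an inertia weight. It is an illustration only and not the group's implementation or the CIlib API: the function names, the sphere objective, and the control parameter values (`w = 0.729`, `c1 = c2 = 1.494`) are assumptions chosen for the example.

```python
import random


def sphere(x):
    """Illustrative objective (assumption): sum of squares, minimum at the origin."""
    return sum(v * v for v in x)


def gbest_pso(f, dim, bounds, n_particles=20, iterations=200,
              w=0.729, c1=1.494, c2=1.494, seed=0):
    """Minimize f with a basic global-best PSO (hypothetical helper)."""
    rng = random.Random(seed)
    lo, hi = bounds
    # Positions start uniformly inside the bounds; velocities start at zero.
    pos = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in pos]
    pbest_val = [f(p) for p in pos]
    g = min(range(n_particles), key=lambda i: pbest_val[i])
    gbest, gbest_val = pbest[g][:], pbest_val[g]

    for _ in range(iterations):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                # Inertia + cognitive (personal best) + social (global best) terms.
                vel[i][d] = (w * vel[i][d]
                             + c1 * r1 * (pbest[i][d] - pos[i][d])
                             + c2 * r2 * (gbest[d] - pos[i][d]))
                # No boundary handling: particles may leave the initial bounds.
                pos[i][d] += vel[i][d]
            val = f(pos[i])
            if val < pbest_val[i]:
                pbest[i], pbest_val[i] = pos[i][:], val
                if val < gbest_val:
                    gbest, gbest_val = pos[i][:], val
    return gbest, gbest_val
```

Calling `gbest_pso(sphere, 3, (-5.0, 5.0))` returns the best position found and its objective value. The control parameters `w`, `c1`, and `c2` are exposed as arguments because their influence on search behavior is exactly what sensitivity and stability analyses study.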

Currently our research in PSO focuses on gaining a better understanding of the algorithm's search behavior, including theoretical stability analysis, analysis of control parameter importance and sensitivity, and empirical analyses. Work is also done to gain a better understanding of the performance of different PSO algorithms on different fitness landscapes, using formal fitness landscape analyses. With reference to algorithm development, our focus is currently on the development of efficient PSO algorithms to solve

- constrained,

- large-scale,

- dynamic,

- multi- and many-objective,

- dynamic multi- and many-objective, and

- dynamically constrained

optimization problems. In addition, PSO algorithms are developed to find multiple optima for static optimization problems and to track multiple optima for dynamic optimization problems.

We are also doing research into the development of PSO algorithms that can be used to solve optimization problems where the solutions can be represented using sets. Competitive coevolutionary PSO algorithms are developed to solve static and dynamic optimization problems, and to train neural networks from zero knowledge. Formal analyses of the relative importance of the control parameters of the different PSO algorithms are conducted, working towards more efficient self-adaptive PSO algorithms.

Other applications for which we develop PSO algorithms include

- Gaussian mixture models for clustering stationary and non-stationary data

- neural network training, specifically under the presence of concept drift

- portfolio optimization

- community detection in social networks

- trading on the stock market

- polynomial regression for static and dynamic data

- training neural network ensembles

- rule induction

- training support vector machines, and scaling support vector machines to very large data sets

Evolutionary Computation

Our research in evolutionary computation focuses on differential evolution (DE), genetic programming (GP), coevolution (CoE), and cultural algorithms (CAs). Current research focuses on

- the development of GP approaches to induce classification trees and model trees for non-stationary data

- the development of diversity measures for GP populations

- an analysis of the effect of different population sizes on the search behavior of DE

- determining links between DE algorithm performance, the DE control parameters, and fitness landscape characteristics

- determining optimal DE population sizes as a function of DE control parameters and fitness landscape characteristics

- cooperative coevolutionary approaches to large-scale optimization

- competitive coevolutionary approaches to solve static and dynamic optimization problems, and to train neural networks in an unsupervised fashion
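To make the DE control parameters referred to in the list above concrete, here is a minimal sketch of the classic DE/rand/1/bin strategy. It is an illustration under stated assumptions, not the group's code: the function names, the sphere objective, and the default values for the population size, scale factor `F`, and crossover rate `CR` are all chosen for the example.

```python
import random


def sphere(x):
    """Illustrative objective (assumption): sum of squares, minimum at the origin."""
    return sum(v * v for v in x)


def de_rand_1_bin(f, dim, bounds, pop_size=20, generations=200,
                  F=0.5, CR=0.9, seed=0):
    """Minimize f with DE/rand/1/bin (hypothetical helper; needs pop_size >= 4)."""
    rng = random.Random(seed)
    lo, hi = bounds
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    fit = [f(x) for x in pop]

    for _ in range(generations):
        new_pop, new_fit = [], []
        for i in range(pop_size):
            # Pick three distinct individuals, all different from the target i.
            others = [j for j in range(pop_size) if j != i]
            a, b, c = rng.sample(others, 3)
            # Binomial crossover; j_rand guarantees at least one mutated component.
            j_rand = rng.randrange(dim)
            trial = pop[i][:]
            for d in range(dim):
                if d == j_rand or rng.random() < CR:
                    trial[d] = pop[a][d] + F * (pop[b][d] - pop[c][d])
            trial_fit = f(trial)
            # Greedy one-to-one selection between target and trial vector.
            if trial_fit <= fit[i]:
                new_pop.append(trial)
                new_fit.append(trial_fit)
            else:
                new_pop.append(pop[i])
                new_fit.append(fit[i])
        pop, fit = new_pop, new_fit

    best = min(range(pop_size), key=lambda i: fit[i])
    return pop[best], fit[best]
```

The population size, `F`, and `CR` are the three arguments one would vary when studying how control parameters and landscape characteristics jointly affect DE performance.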

Artificial Neural Networks

While our research into neural networks in the past was quite diverse, the current focus of our neural networks research is to develop meta-heuristic, specifically PSO, approaches to train neural networks on non-stationary data, and to gain a better understanding of the search landscapes produced by neural networks. Below is a list of the current research directions for this research focus area:

- an in-depth analysis of the hidden unit saturation behavior of PSO when used to train neural networks

- development of hidden unit saturation measures

- the development of efficient approaches to training neural networks under the presence of concept drift

- an investigation of how efficient PSO algorithms are when training regularized neural networks on non-stationary data

- fitness landscape analysis of neural network error surfaces, and the impact of different parameters of neural networks on the fitness landscape

- an analysis of the impact of mini-batch training, incremental learning and active learning on the fitness landscape

- the development of multimodal PSO algorithms to produce neural network ensembles

- an analysis of the impact of bagging approaches on the fitness landscapes of the individual networks in an ensemble

Fitness Landscape Analysis

Fitness landscape analysis has become an important vehicle for understanding the complexity of the search landscapes of optimization problems, and how algorithm performance links to these landscape characteristics. Our current research focuses on

- gaining a better understanding of neural network error landscapes, specifically for non-stationary data

- gaining a better understanding of the landscapes produced for the feature selection problem

- developing local optima networks to understand discrete-valued landscapes

- developing approaches to scale fitness landscape analysis to very large dimensional spaces

- analyses of the robustness and coverage of fitness landscapes

- analyses of the effect of different random walks on coverage and robustness

- developing alternative, more efficient random walks and sampling approaches

- analysing the fitness landscape characteristics of benchmark functions

- analysing the link between control parameter optimality and fitness landscape characteristics

- developing landscape-aware search approaches
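As a rough illustration of how a random walk is used to sample a landscape, the sketch below takes a simple bounded random walk and computes the lag-1 autocorrelation of the fitness values along it, a classic ruggedness indicator. This is not the group's library: the function names, the uniform step distribution, and the clamping at the bounds are assumptions made for the example.

```python
import math
import random


def random_walk(dim, bounds, steps, step_size, rng):
    """Simple bounded random walk (hypothetical helper).

    Each step perturbs every coordinate uniformly in
    [-step_size, step_size] and clamps the result to the bounds.
    """
    lo, hi = bounds
    x = [rng.uniform(lo, hi) for _ in range(dim)]
    walk = [x[:]]
    for _ in range(steps):
        x = [min(hi, max(lo, v + rng.uniform(-step_size, step_size))) for v in x]
        walk.append(x[:])
    return walk


def autocorrelation(values, lag=1):
    """Sample autocorrelation of a fitness sequence at the given lag.

    Values near 1 suggest a smooth landscape along the walk; values near
    0 suggest a rugged one. Returns NaN when the sequence has no variance,
    because the correlation is then undefined.
    """
    n = len(values)
    mean = sum(values) / n
    var = sum((v - mean) ** 2 for v in values)
    if var == 0.0:
        return math.nan
    cov = sum((values[t] - mean) * (values[t + lag] - mean)
              for t in range(n - lag))
    return cov / var
```

A typical use is to evaluate an objective at every point of `random_walk(...)` and pass the resulting list to `autocorrelation`. Different walk types (for example progressive or Manhattan walks) would replace `random_walk` while the measure stays the same, which is how the effect of the walk on coverage and robustness can be compared.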

Hyper-Heuristics

Our past research into hyper-heuristics has produced a selection hyper-heuristic framework for static optimization problems and scheduling problems. Our current research into hyper-heuristics focuses on expanding this framework by developing

- selection hyper-heuristics to solve dynamic optimization problems

- selection hyper-heuristics to train neural networks

- new heuristic selection operators for selection hyper-heuristics

Machine Learning

Our machine learning research, in addition to the neural networks research above, focuses mainly on the development of meta-heuristic based approaches to machine learning and ensembles. Current research includes:

- the development of model tree forests

- an analysis of heterogeneous mixtures of experts

- using multimodal optimization meta-heuristics to create ensembles, specifically neural network ensembles for static and non-stationary data

- genetic programming approaches to decision tree induction, including classification and model trees for both stationary and non-stationary data

- reinforcement learning approaches for stock market prediction

Optimization

Our research in optimization is specifically with reference to meta-heuristics, where the focus is on solving different classes of optimization problems, including

- unconstrained and constrained optimization problems

- static and dynamic optimization problems

- single-objective, multi-objective, and many-objective optimization problems

- large-scale optimization problems

- optimization problems where the dimensionality varies over time

- problems where it is required to find all solutions, or at least as many as possible

- problems with decision variable dependencies

- continuous-valued and discrete-valued problems

- problems that are combinations of the above

Data Analytics

The focus of our data analytics research is on the development and application of machine learning and meta-heuristic approaches to data understanding. Here we have a strong focus on data clustering algorithms for stationary and non-stationary data, time series clustering and analytics, and image analytics.

Applications

While our research is mainly directed towards the development of new algorithms, the improvement of existing algorithms, and gaining a better understanding of algorithm behavior and problem complexity, we also conduct research in the application of these algorithms to solve real-world problems. Below is a list of some of the current applications that we are working on:

- portfolio optimization

- predictive models for trading

- analysis of panoramic dental cephalograms

- predictive models for the aging potential of South African red wines

- data clustering

- time series clustering

- time series analytics, specifically in the product space

- train scheduling

- game learning

Computational Intelligence Library

Our research has resulted in the development of a number of libraries:

- CIlib, which is a generic framework for the development of optimization algorithms, implemented in Scala. See Computational Intelligence Library for more information, or join the CIlib chat.

- A library of benchmark functions, also implemented in Scala.

- A library containing random walks and fitness landscape analysis measures, also in Scala.